People are pulling footage from Blade Runner, Dune and other films, plus stuff they've shot themselves, and cobbling it all together, or mixing SE and LD footage, etc.

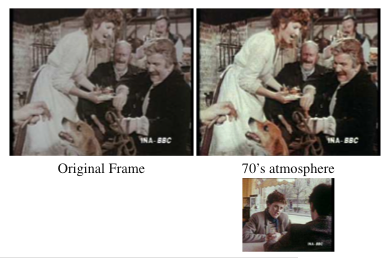

Colour matching can be a pain in the arse, so I just thought I'd throw in a method that can be used pretty much automatically, even if the scenes are very different.

This would allow you (for example) to take the colour scheme from a room in Blade Runner and match it to a room from Revenge of the Sith, or colour match the entire SE to match one of the LD releases.

The crux of the method is to take the probability density function (PDF) of the colours in one image and apply it to the other. Obviously a one-dimensional PDF match on its own isn't going to give a very good result when the two images are very different. But if you feed the result back in and repeat (i.e. use it iteratively), it converges towards the target and can in fact get very close to an exact match. It isn't very computationally intensive and can achieve amazing results.
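The exact algorithm isn't spelled out here, so what follows is only a rough sketch of one well-known technique that fits the description: iterative 1-D PDF transfer along randomly rotated colour axes (the "iterative distribution transfer" of Pitié, Kokaram and Dahyot). It assumes NumPy and images already loaded as float arrays of shape (H, W, 3). `match_1d` and `transfer_colours` are hypothetical names, not from any library. Each pass rotates the colour space, histogram-matches each of the three rotated axes, and rotates back. Repeating with new rotations pulls the full 3-D colour distribution towards the reference.

```python
import numpy as np


def match_1d(source, target):
    """Remap 1-D samples so their distribution matches `target`.

    Classic CDF matching: x -> F_target^-1(F_source(x)).
    """
    # Empirical CDF value of every source sample, via its rank.
    order = np.argsort(source)
    ranks = np.empty_like(order)
    ranks[order] = np.arange(source.size)
    u = (ranks + 0.5) / source.size
    # Inverse CDF (quantile function) of the target, by interpolation.
    t = np.sort(target)
    tq = (np.arange(t.size) + 0.5) / t.size
    return np.interp(u, tq, t)


def transfer_colours(source, target, iterations=20, seed=0):
    """Iteratively push source's colour distribution towards target's.

    Assumption: both inputs are float arrays with 3 channels in the last axis.
    """
    rng = np.random.default_rng(seed)
    x = source.reshape(-1, 3).astype(np.float64)
    y = target.reshape(-1, 3).astype(np.float64)
    for _ in range(iterations):
        # Random orthonormal rotation of the colour space (QR of a Gaussian matrix).
        q, r = np.linalg.qr(rng.standard_normal((3, 3)))
        rot = q * np.sign(np.diag(r))
        # Project both colour clouds onto the rotated axes.
        xp = x @ rot.T
        yp = y @ rot.T
        # 1-D PDF transfer along each rotated axis.
        for i in range(3):
            xp[:, i] = match_1d(xp[:, i], yp[:, i])
        # Rotate back; rot is orthonormal, so its inverse is its transpose.
        x = xp @ rot
    return x.reshape(source.shape)
```

To use it you'd load a frame to fix and a reference frame (say one from the SE and one from an LD release), convert both to float, call `transfer_colours(frame_to_fix, reference_frame)`, then clip back to the valid range before saving. Each pass only does three 1-D histogram matches, which is why it stays cheap. More iterations bring the result closer to the reference's full colour distribution.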

If anyone is interested in giving it a go, I'll post some reference papers and the math.