ChainsawAsh said:

What you see in a theater on opening day, the first (or second, or third) time a print is run, is probably somewhere between 2K-4K quality.

Today, though, any film print will have been made from a 2K or 4K DI anyway, so it doesn't really matter. If you're only concerned about the most detail, see it digitally with a 4K projector.

I, however, prefer seeing something that was shot on film projected on film, for aesthetic reasons, regardless of whether or not the 4K digital theater across the hall has more detail or not. If it was shot digitally, it should be seen digitally.

Basically, I want to see it as close to the way it was shot as possible. Which is why I don't see films in IMAX unless, like The Dark Knight, at least part of the film was shot on IMAX.

But if you want to capture Star Wars the way it was seen in theaters in 1977, it's likely to be somewhere between 2K and 4K quality. Unless you saw it in 70mm, in which case it was probably above 4K quality.

You refuse to see films in IMAX but you think Star Wars in 70mm has better quality? IMAX and 70mm work on the same principle. In both cases you are seeing 35mm blown up to a duplicate format approximately double its original size. And in both cases, I doubt you actually gain any resolution.

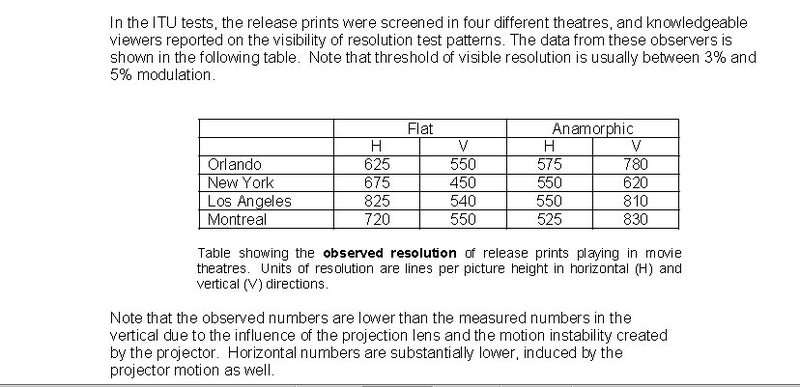

As for the argument for digital projection--Cameron is right there. Most prints you see are not 4K even on their first run. Resolution does not degrade the more a print is run; either a print will resolve four thousand lines or it won't, and the fact is that a print will never, ever give you 4K resolution. It is roughly HD resolution, in many cases somewhere between 720 and 1080 lines. There were studies conducted by a European cinema group [EDIT: I think that is what was linked above] that ran tests across dozens of theatres with different subjects, and they found that on average viewers could discern only 800 lines of resolution in a print. Now, I take issue with this, as I have seen prints that have more picture information in them than their HD counterparts, which tells me that prints can outperform HD. However, not all prints are the same--even the reels differ. Some reels are sharp and some are soft within the same film, because quality varies as they are printed, due to light pollution and registration among other things. I would say that in a best-case scenario, if you saw a perfect print from a limited run (which, because they make only a couple hundred prints and not 50,000, tend to be much better quality), it would be in the 2K range. But otherwise, your typical theatrical print could not possibly be in the 4K range, simply because it is not possible to retain that much information once the image is (at least) three generations removed from the negative.

However, as I mentioned previously, digital projection often tends to "look" digital; you can see the video artifacts, which is why I prefer film most of the time. It's not because it is digital per se. If you saw it from the DI itself it would look great; the problem is the HD downconvert and the projectors used. When I saw Inception last week it looked bad, even though the resolution was about the same as a film print. However, when I saw the Final Cut of Blade Runner in 2007, it was one of the best-looking projections I have seen in my entire life, because it was from a premium projector in a single-screen theatre, not one of those typical multiplex ones. Maybe it was a 4K projector, but I don't know if any commercial films were being shipped to theatres in 4K. Also, and I will get into this as it relates to film, just because something is being projected at 1920x1080 doesn't mean that's what the resolution is. That is the size of the image, true, but film and video aren't measured the same way: film has no pixel resolution, so you have to measure the resolving power of both. A lot of HD downconverts for multiplexes simply do not have a thousand lines of vertical resolution when projected. The picture may technically be that pixel size, but if the movie were preceded by a lens chart you might discover that it only resolves about 800 lines. The digital projection of Inception I saw might have been such a case. That's why it is misleading to just look at resolution and say "this number is bigger": resolving power is different from pixel resolution, and because film has no fixed pixel size, resolving power is what you end up comparing--what actually gets captured.

On a similar train of thought, you also have to keep in mind that while 35mm film can resolve more than 4K, in a practical sense it often doesn't. The conventional wisdom is that 35mm film resolves about five thousand lines. But film isn't like digital video, where the resolution is fixed--the resolving power of film depends on what you put into it. There are two main factors that contribute to this: 1) lens, and 2) film stock. If you shoot on a 500 ASA stock with a 200mm zoom lens, you aren't going to get more than 2K on the negative. But if you shoot on a 100 ASA fine-grain stock with something like a 50mm Cooke S4 prime, you will probably get all five thousand glorious lines of resolving power on that negative.

However, stocks and lenses today are not at all the same as the ones from eras past. In fact, in the 1990s, film stock became so sharp that cinematographers started complaining it was beginning to look like video, which is part of what prompted the move to grainier, coarser looks like Saving Private Ryan. And the lens technology of the last 20 years is absolutely incredible--with something like the Cooke S4 series, the sharpness you get is remarkable. So a movie from the 1970s like Star Wars, because it was shot on older lenses and stocks that aren't as clear and defined as today's, might only pick up 4K picture information in the best of instances. It's hard to say without examining the negative itself, but that is generally what should be the case.

That's why you can't say "film=[this resolution]". Maybe it does for one film, but on another film it will be totally different. 35mm has no fixed resolution; the resolution is determined by the lens and the stock. In ideal conditions with modern stocks and lenses it performs at about five thousand lines of resolving power, but in practice it will fluctuate depending on the production and even the shot.

Also bear in mind that most films are finished as 4K D.I.s these days, so even in the rare case where you exceeded 4K resolution, the actual negative you print out will be 4K resolution because that is what the camera negs were scanned at. So, cool, you used the best lenses and stocks and I count 5500 lines on the lens chart, that is great, but the negative becomes a 4K digital file, so you threw away the difference, and then prints and downconversions just get degraded from there. In most cases though, an 8K scan is unnecessary. In the traditional world, also, if we are going straight film to film, the negative is not the completed movie: it would have no colour correction, and any opticals would already be second generation, so by the time your pristine interpositive is printed it's a mix of second- and third-generation film material and probably only shows just above 2K resolution.
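To put rough numbers on that "weakest link" idea, here is a minimal sketch. It assumes each stage in the chain can only cap the detail it receives and never adds any, and it treats "4K" as roughly four thousand lines, which is a simplification. The function name `delivered_lines` is made up for illustration, and real generation loss will land below this ceiling rather than exactly on it.

```python
def delivered_lines(captured_lines, *stage_limits):
    """Upper bound on the lines of detail that survive a chain of stages.

    Assumption: a stage (scan, print, downconvert, projector) can pass
    along at most what it is capable of holding, and cannot restore
    detail that an earlier stage already lost.
    """
    return min((captured_lines, *stage_limits))


# Figures taken from the post: 5500 lines counted on the lens chart,
# then scanned into a 4K DI (treated here as ~4000 lines).
print(delivered_lines(5500, 4000))   # 4000

# The reverse case: a 1080-line container holding an image that only
# resolves about 800 lines. The pixel count does not add detail.
print(delivered_lines(800, 1080))    # 800
```

Either way the smaller number wins, which is why comparing the headline pixel count alone tells you very little.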

So, uh, I've gone on an aimless tangent here, but what I'm trying to say is that Cameron has a point, because pixel count ("resolution"...which really isn't an accurate term in the sense that we are using it) isn't everything, and his notion of frame rate and perceived resolution is interesting and probably correct. But I don't think anyone who has worked with film would say HD can beat 35mm film. I did a bunch of tests in 2007 comparing 35mm against the camera Attack of the Clones was shot on, among others, and the difference was night and day. That was 2007 and things have improved greatly since then, but I still find it hard to believe. ChainsawAsh's own tests seem to confirm my suspicions.